
META. Small toy figures are seen in front of Meta's logo in this illustration taken October 28, 2021.

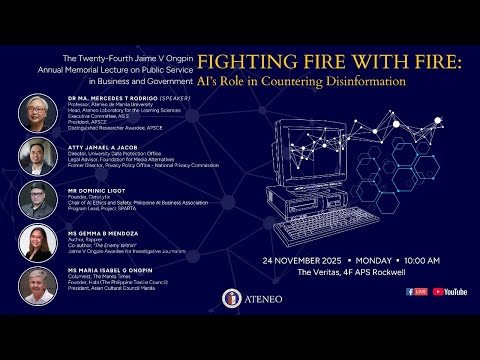

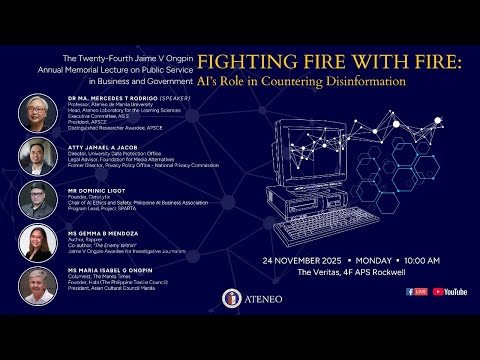

24th Jaime V. Ongpin lecture focuses on fighting disinformation in the age of AI

MANILA, Philippines – Artificial intelligence (AI) can be both a vector for spreading disinformation and a means of fighting it, according to experts who spoke at the 24th Jaime V. Ongpin Annual Memorial Lecture at the Ateneo Professional Schools on Monday, November 24.

In her keynote speech, Professor Maria Mercedes Rodrigo, head of the Ateneo Laboratory for the Learning Sciences, said that “AI has accelerated and democratized the capacity to create deepfakes — synthetic text, images, audio, video of events that never took place.”

She added, “Generative AI has the potential to inundate media with deepfakes, misinformation, and synthetic content, and the Philippines has been designated patient zero in the context of global disinformation largely attributable to the significant prevalence of misinformation during the 2016 Philippine elections.”

The study behind Rodrigo's keynote drew on a series of interviews with 14 experts: six industry professionals, three academics, three civil society members, and two members of the media.

Within industry, the two most common applications of AI were fraud detection and anti-money laundering on one hand, and personalized marketing campaigns on the other.

In her speech, Rodrigo cited the need for regulatory policies and a code of ethics governing AI use. Industry and academic key informants, she said, affirmed the need for ethical and regulatory policies to ensure AI safety.

Meanwhile, “users and creators of AI applications need to be cognizant of safety, security, and intellectual property implications.”

“Based on our interviews, we can see that we as a country have the internal capacity and expertise. We have the education and the awareness. What we seem to lack is scale, and what we need is scale,” Rodrigo said.

To cap off her speech, Rodrigo mentioned some of the proposed remedies in the study, which focused on combating disinformation through effective implementation of governance structures, policy adaptations, and collaboration across sectors.

These included a call for a national AI governance framework aligned with the Department of Trade and Industry’s National AI Strategy Roadmap 2.0, as well as the promotion of media literacy and public awareness of AI both as a tool and as a means of spreading disinformation among the unwary.


Panel of reactors

A panel of reactors also offered remarks on the possibilities and pitfalls of artificial intelligence. Alongside Rodrigo were lawyer Jamael Jacob, director of the university’s data protection office; Dominic Ligot, founder of Cirrolytix and chair of AI ethics and safety at the Philippine AI Business Association; and Gemma Mendoza, Rappler’s head of digital services and lead researcher on disinformation and platforms.

According to Jacob, critics and proponents of AI alike readily admit that AI is not perfect: it makes mistakes, or “hallucinates.” As such, the jury is still out on whether AI can be used to counter harmful uses of AI.

He added that transparency is crucial to assigning responsibility for AI’s uses and any potential missteps, though calls for transparency and accountability are often rejected because big technology companies treat their AI algorithms as trade secrets.

Ligot, meanwhile, argued that AI is neither a savior nor a villain, and that deepfakes must be treated as a present operational threat rather than a distant one. He also favored limiting the reach of bad actors, by detecting the coordinated spread of falsehoods, over policing free expression. The reach of paid disinformation, he explained, vastly outpaces that of the truth, because disinformation is amplified by design and goes viral more readily.

Mendoza discussed tech companies’ accountability for labeling fake content. She described how a rise in synthetic content and AI slop, especially deepfakes such as hyperrealistic videos, has drowned out real content on social media feeds. This is made worse by widely used but still little-known AI tools for generating deepfakes.

She noted that platforms profit from the spread of deepfakes, citing a Reuters report which, based on internal company documents, found that Meta earns a fortune from fraudulent ads by charging suspected fraudsters more for amplification instead of investigating the source of the fraud.

Mendoza added, “While innovation should be welcomed, guard rails should be in place and platforms should not be allowed to profit from content theft. This will ultimately kill that rich marketplace of information, art, and culture that AI is currently benefiting from.”

The study by Rodrigo, Rommel Jude Ong, Karen Claire Garcia, Charisse Erinn Flores, and Johanna Marion Torres is available to download for free. It was funded by the Konrad Adenauer Stiftung. – Rappler.com


Disclaimer: The articles reposted on this site are sourced from public platforms and are provided for informational purposes only. They do not necessarily reflect the views of MEXC. All rights remain with the original authors. If you believe any content infringes on third-party rights, please contact service@support.mexc.com for removal. MEXC makes no guarantees regarding the accuracy, completeness, or timeliness of the content and is not responsible for any actions taken based on the information provided. The content does not constitute financial, legal, or other professional advice, nor should it be considered a recommendation or endorsement by MEXC.
