Bitcoin encryption isn’t at risk from quantum computers for one simple reason: it doesn’t actually exist

Contrary to popular belief, quantum computers will not “crack” Bitcoin encryption; instead, any realistic threat would focus on exploiting digital signatures tied to exposed public keys.

Quantum computers cannot decrypt Bitcoin because it stores no encrypted secrets on-chain.

Ownership is enforced by digital signatures and hash-based commitments, not ciphertext.

The quantum risk that matters is the risk of authorization forgery.

If a cryptographically relevant quantum computer can run Shor’s algorithm against Bitcoin’s elliptic-curve cryptography, it could derive a private key from an on-chain public key and then produce a valid signature for a competing spend.

Much of the “quantum breaks Bitcoin encryption” framing is a terminology error. Adam Back, longtime Bitcoin developer and Hashcash inventor, has made the same point on X.

A separate post made the same distinction more explicitly, noting that a quantum attacker would not “decrypt” anything, but would instead use Shor’s algorithm to derive a private key from an exposed public key.

Why public-key exposure, not encryption, is Bitcoin’s real security bottleneck

Bitcoin’s signature systems, ECDSA and Schnorr, are used to prove control over a keypair.

In that model, coins are taken by producing a signature that the network will accept.

That is why public-key exposure is the pivot.

Whether an output is exposed depends on what appears on-chain.

Many address formats commit to a hash of a public key, so the raw public key is not revealed until the output is spent.

That narrows the window for an attacker to compute a private key and publish a conflicting transaction.

Other script types expose a public key earlier, and address reuse can turn a one-time reveal into a persistent target.

Project Eleven’s open-source “Bitcoin Risq List” query defines exposure at the script and reuse level.

It maps where a public key is already available to a would-be Shor attacker.
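
As a rough illustration of what script-level exposure means, the sketch below sorts an output script into the standard templates and reports whether a public key sits in the output itself. It is not Project Eleven’s query; the function name `classify_script_pubkey` is hypothetical, and only the well-known byte layouts of the standard script types are assumed. Bare multisig and anything else non-standard is returned as unknown rather than guessed.

```python
from typing import Optional, Tuple


def classify_script_pubkey(script: bytes) -> Tuple[str, Optional[bool]]:
    """Return (script type, True if a public key is visible in the output).

    Hypothetical helper for illustration; None means "not classified here".
    """
    n = len(script)
    # P2PK: <push 33 or 65 bytes> <pubkey> OP_CHECKSIG (0xac)
    if n in (35, 67) and script[0] == n - 2 and script[-1] == 0xAC:
        return "p2pk", True
    # P2TR (BIP 341): OP_1 (0x51) <push 32> <32-byte output key>
    if n == 34 and script[:2] == b"\x51\x20":
        return "p2tr", True
    # P2PKH: OP_DUP OP_HASH160 <push 20> <hash> OP_EQUALVERIFY OP_CHECKSIG
    if n == 25 and script[:3] == b"\x76\xa9\x14" and script[-2:] == b"\x88\xac":
        return "p2pkh", False
    # P2SH: OP_HASH160 <push 20> <hash> OP_EQUAL
    if n == 23 and script[:2] == b"\xa9\x14" and script[-1] == 0x87:
        return "p2sh", False
    # P2WPKH: OP_0 <push 20> <hash>
    if n == 22 and script[:2] == b"\x00\x14":
        return "p2wpkh", False
    # P2WSH: OP_0 <push 32> <hash>
    if n == 34 and script[:2] == b"\x00\x20":
        return "p2wsh", False
    return "other", None


# Placeholder scripts with dummy key and hash bytes, for shape only.
examples = {
    "pay-to-pubkey": bytes([33]) + b"\x02" + bytes(32) + b"\xac",
    "taproot": b"\x51\x20" + bytes(32),
    "pay-to-pubkey-hash": b"\x76\xa9\x14" + bytes(20) + b"\x88\xac",
}
for label, script in examples.items():
    print(label, classify_script_pubkey(script))
```

Hash-based types report `False` only for the unspent output; once such an output is spent, the key is revealed in the spending transaction, which is where reuse comes in.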

Why quantum risk is measurable today, even if it isn’t imminent

Taproot changes the exposure pattern in a way that matters only if large fault-tolerant machines arrive.

Taproot outputs (P2TR) include a 32-byte tweaked public key in the output program, rather than a pubkey hash, as described in BIP 341.

Project Eleven’s query documentation includes P2TR alongside pay-to-pubkey and some multisig forms as categories where public keys are visible in outputs.

That does not create a new vulnerability today.

However, it changes what becomes exposed by default if key recovery becomes feasible.

Because exposure is measurable, the vulnerable pool can be tracked today without pinning down a quantum timeline.

Project Eleven says it runs an automated weekly scan and publishes a “Bitcoin Risq List” concept intended to cover every quantum-vulnerable address and its balance, detailed in its methodology post.

Its public tracker shows a headline figure of about 6.7 million BTC that meet its exposure criteria.

| Quantity | Order of magnitude | Source |
|---|---|---|
| BTC in “quantum-vulnerable” addresses (public key exposed) | ~6.7M BTC | Project Eleven |
| Logical qubits for 256-bit prime-field ECC discrete log (upper bound) | ~2,330 logical qubits | Roetteler et al. |
| Physical-qubit scale example tied to a 10-minute key-recovery setup | ~6.9M physical qubits | Litinski |
| Physical-qubit scale reference tied to a 1-day key-recovery setup | ~13M physical qubits | Schneier on Security |

On the computational side, the key distinction is between logical qubits and physical qubits.

In the paper “Quantum resource estimates for computing elliptic curve discrete logarithms,” Roetteler and co-authors give an upper bound of 9n + 2⌈log₂(n)⌉ + 10 logical qubits to compute an elliptic-curve discrete logarithm over an n-bit prime field.

For n = 256, that works out to about 2,330 logical qubits.
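
The arithmetic behind that figure can be checked in a few lines. This is a minimal sketch that simply evaluates the bound as quoted above; the function name `logical_qubits_upper_bound` is ours, not the paper’s.

```python
def logical_qubits_upper_bound(n: int) -> int:
    """Evaluate 9n + 2*ceil(log2(n)) + 10 for an n-bit prime field."""
    # For n >= 1, (n - 1).bit_length() equals ceil(log2(n)) and avoids floats.
    ceil_log2 = (n - 1).bit_length()
    return 9 * n + 2 * ceil_log2 + 10


# 9 * 256 = 2304, ceil(log2(256)) = 8, so 2304 + 16 + 10 = 2330.
print(logical_qubits_upper_bound(256))  # 2330
```

The count is for logical qubits only and says nothing about how many physical qubits error correction would require.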

Converting that into an error-corrected machine that can run a deep circuit at low failure rates is where physical-qubit overhead and timing dominate.

Architecture choices then set a wide range of runtimes.

Litinski’s 2023 estimate puts a 256-bit elliptic-curve private-key computation at about 50 million Toffoli gates.

Under its assumptions, a modular approach could compute one key in about 10 minutes using about 6.9 million physical qubits.

In a Schneier on Security summary of related work, estimates cluster around 13 million physical qubits to break a key within one day.

The same line of estimates also cites about 317 million physical qubits to target a one-hour window, depending on timing and error-rate assumptions.

For Bitcoin operations, the nearer levers are behavioral and protocol-level.

Address reuse raises exposure, and wallet design can reduce it.

Project Eleven’s wallet analysis notes that once a public key is on-chain, future receipts back to that same address remain exposed.
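
A toy model makes the reuse point concrete. The sketch below assumes a simplified per-address balance view rather than Bitcoin’s actual UTXO model, and the event format and the name `reuse_exposed_balance` are hypothetical. Any address that has spent at least once is treated as having revealed its key, so whatever it holds afterwards counts as exposed.

```python
from collections import defaultdict
from typing import Dict, Iterable, Tuple

Event = Tuple[str, str, int]  # (address, "receive" or "spend", amount)


def reuse_exposed_balance(events: Iterable[Event]) -> Dict[str, int]:
    """Return balances held by addresses whose key was revealed by a spend.

    Events must be in chain order. Simplified illustration only.
    """
    revealed = set()
    balance = defaultdict(int)
    for address, kind, amount in events:
        if kind == "receive":
            balance[address] += amount
        elif kind == "spend":
            # Spending from a hash-based address publishes its public key.
            revealed.add(address)
            balance[address] -= amount
        else:
            raise ValueError(f"unknown event kind: {kind}")
    return {a: b for a, b in balance.items() if a in revealed and b > 0}


events = [
    ("addr_a", "receive", 5),
    ("addr_a", "spend", 5),    # key for addr_a is now on-chain
    ("addr_a", "receive", 2),  # reuse: these coins sit behind a known key
    ("addr_b", "receive", 3),  # never spent, key still hidden behind a hash
]
print(reuse_exposed_balance(events))  # {'addr_a': 2}
```

A wallet that generates a fresh address for every receipt never produces the third event, which is the design lever referred to above.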

If key recovery ever fit inside a block interval, an attacker would be racing spends from exposed outputs, not rewriting consensus history.

Hashing is often bundled into the narrative, but the quantum lever there is Grover’s algorithm.

Grover provides a square-root speedup for brute-force search rather than the discrete-log break Shor provides.

NIST research on the practical cost of Grover-style attacks stresses that overhead and error correction shape system-level cost.

In the idealized model, for SHA-256 preimages, the target remains on the order of 2^128 work after Grover.

That is not comparable to an ECC discrete-log break.
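
The square-root relationship is easy to verify. This sketch uses the idealized model only: it ignores constant factors, circuit depth, and error-correction overhead, and the function name `grover_queries_log2` is ours.

```python
import math


def grover_queries_log2(search_bits: int) -> float:
    """log2 of the idealized Grover query count, about sqrt(2**search_bits)."""
    return search_bits / 2


classical_bits = 256  # SHA-256 preimage search space is 2**256 candidates
quantum_bits = grover_queries_log2(classical_bits)
print(f"classical ~2^{classical_bits}, Grover ~2^{quantum_bits:.0f}")

# Cross-check with exact integer arithmetic.
assert math.isqrt(2 ** classical_bits) == 2 ** 128
```

Halving the exponent still leaves an enormous search, whereas Shor’s algorithm solves the discrete-log problem in polynomial time.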

That leaves signature migration, where the constraints are bandwidth, storage, fees, and coordination.

Post-quantum signatures are often kilobytes rather than the tens of bytes users are accustomed to.

That changes transaction weight economics and wallet UX.
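
A back-of-the-envelope calculation shows the scale of the change. The sketch below is illustrative and rests on loud assumptions: the post-quantum schemes listed are NIST-standardized examples with their published signature sizes, not schemes Bitcoin has adopted; signatures are assumed to sit in witness data at one weight unit per byte, as under BIP 141; and public keys and all other transaction data are ignored, so the counts are ceilings, not forecasts.

```python
MAX_BLOCK_WEIGHT = 4_000_000  # block weight limit in weight units (BIP 141)

# Signature sizes in bytes. The post-quantum entries are examples only.
SIGNATURE_BYTES = {
    "Schnorr (BIP 340)": 64,
    "ML-DSA-44 (FIPS 204)": 2420,
    "SLH-DSA-SHA2-128s (FIPS 205)": 7856,
}


def max_signatures_per_block(sig_bytes: int) -> int:
    """Ceiling on signatures per block if a block held nothing else.

    Assumes each witness byte costs one weight unit.
    """
    return MAX_BLOCK_WEIGHT // sig_bytes


for name, size in SIGNATURE_BYTES.items():
    print(f"{name}: {size} bytes, at most {max_signatures_per_block(size)} per block")
```

Under these assumptions the ceiling falls from 62,500 Schnorr signatures per block to 1,652 for ML-DSA-44 and 509 for SLH-DSA-SHA2-128s, which is why fees and block space dominate the migration discussion.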

Why quantum risk is a migration challenge, not an immediate threat

Outside Bitcoin, NIST has standardized post-quantum primitives such as ML-KEM (FIPS 203) as part of broader migration planning.

Inside Bitcoin, BIP 360 proposes a “Pay to Quantum Resistant Hash” output type.

Meanwhile, qbip.org argues for a legacy-signature sunset to force migration incentives and reduce the long tail of exposed keys.

Recent corporate roadmaps add context for why the topic is framed as infrastructure rather than an emergency.

In a recent Reuters report, IBM discussed progress on error-correction components and reiterated a path toward a fault-tolerant system around 2029.

Reuters also covered IBM’s claim that a key quantum error-correction algorithm can run on conventional AMD chips, in a separate report.

In that framing, “quantum breaks Bitcoin encryption” fails on terminology and on mechanics.

The measurable items are how much of the UTXO set has exposed public keys, how wallet behavior changes in response to that exposure, and how quickly the network can adopt quantum-resistant spending paths while keeping validation and fee-market constraints intact.

The post Bitcoin encryption isn’t at risk from quantum computers for one simple reason: it doesn’t actually exist appeared first on CryptoSlate.

You May Also Like

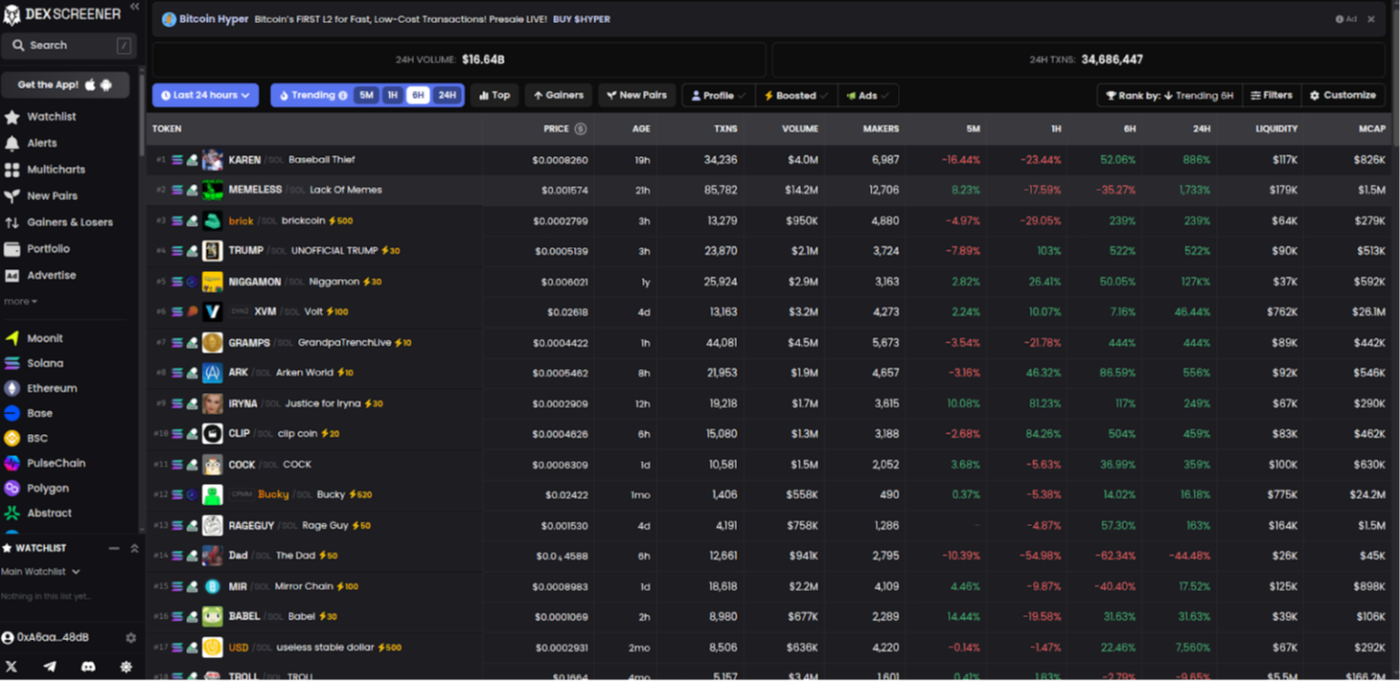

Building a DEXScreener Clone: A Step-by-Step Guide

Which DOGE? Musk's Cryptic Post Explodes Confusion